Tuesday, 16 December 2008

Sydney – Monday, 15 December

I really like the idea of commuting by ferry everywhere, so much more civilised and relaxed. Well, that was until we got the ferry back from Manly and hit the waves from the open sea; the ferry was rolling around like a roller coaster (sea, sky, sea, sky...). Yep, Milly and Connor thought it was great – all I could do was look for the life jackets and rafts.

Sunday, 14 December 2008

Sydney – Sunday, 14 December

To compensate for being dragged around the market again I thought I’d do something educational, so we went to the Museum of Contemporary Art (MCA) at Sydney Harbour – turns out they have it all set up for kids. Milly and Connor really enjoyed going around with clipboards making their own art and answering questions about the artwork.

Fish and chips at Doyles, a fish restaurant right on the seafront at Watsons Bay – superb food at a reasonable price. OK, maybe expensive by Australian standards, but well worth it. Connor thought the snapper was a piranha; it was the first time the kids have had fish that came with heads on.

Saturday, 13 December 2008

Sydney – Saturday, 13 December

We’re staying in The Rocks district of Sydney with fantastic views of the Harbour Bridge and Opera House.

First stop of the day was breakfast: eat as much as you like for $19. This sounds expensive but we managed to get value for money out of it. I think it's essential to get a good breakfast for a hard day ahead being a tourist :-)

Next stop was the market, Connor looking for a boomerang and Milly for jewellery or handbags. Sam decided to buy some traditional lemonade with Connor and Milly, which kept them quiet for about 30 minutes because it was made with real lemon and I think it was seriously bitter.

Can’t tell what the highlight of the day for the kids was, they get excited about everything. Sydney Opera House in the morning, using Darling Harbour fountains and monuments as paddling pools in the afternoon. The use of any water feature seems to be an OK thing to do as everyone else was doing it.

Firework display in the evening ended the day off nicely.

Friday, 12 December 2008

Sydney – Friday, 12 December

Staff at Quay West Suites were really helpful and got our room prepared by 10am, I think the fact that we looked like 5 vagabonds camped out in the hotel lobby helped!

Just to make things seem really bad, the temperature was only 18 C, and it was raining (hard) all day. So other than trying to get some sleep and food we didn’t do too much.

Thursday, 11 December 2008

Singapore – Thursday, 11 December

For a brilliant eating experience try the Singapore International Convention Centre food court. We gave the kids $10 each and they went off and ordered what they wanted, real value for money. Although, you will need to like Asian food otherwise the choice would be limited.

Off to Australia!

Wednesday, 10 December 2008

Singapore – Wednesday, 10 December

I was expecting the humidity to be oppressive, but there was a nice breeze which helped. It's well worth paying for the ride around the park, you can just hop on and off, so when the kids get tired you can still get to the next part of the park.

Tuesday, 9 December 2008

Holiday, Singapore – Tuesday, 9 December

The evening meal took place at a food court, I would recommend a visit to Newton Circus food court – lots of authentic dishes and experiences. Probably the best fried rice anywhere.

Friday, 10 October 2008

September ends...it's all doom and gloom

I had a great time at Wembley Arena on 21 September. The concert exceeded my expectations. I was amazed at the age range of people there, and I was very glad I wasn't the eldest.

I'm determined to keep a positive attitude through these very worrying times. Although I am not sure it's going to be easy. Still on the really positive side I have a holiday in Australia to look forward to. I also keep reminding myself that it is times like this when some of the best opportunities arise.

I still seem to be racking up the air miles, Washington in September and off to Boston again next week. I'll need my holiday in Australia after this year.

Tuesday, 7 October 2008

Why does AltioLive have its own IDE?

An IDE is one of the richest forms of user interface.

Back in 1999 the original reason for having an Altio IDE was that there was no dominant IDE in the marketplace, and showcasing your product's capabilities in an IDE was a good way of demonstrating what can be achieved.

Being a Java based product it could now be argued that AltioLive applications should be editable using common IDEs, such as Eclipse and NetBeans. This still does not remove the question: if a tool is so good, why isn't its IDE written using its own base language? AltioLive is a tool for creating Rich Web Applications, so its IDE should be a rich web application.

The Altio team is now striving to deliver a framework that can be used by a broad spectrum of people with varying software skills. At present we lie somewhere between a development tool and a power user tool. AltioLive will appeal to:

- software developers who have little experience of creating user interfaces and do not want to understand the complexities of the Java Swing APIs, but whose solution requires a highly scalable and rich user interface within the web browser.

- experienced Java developers that want to build their own user interface controls but do not want to build a whole integration framework to the server. They want 80% of the work done for them so that they can get on with the specific needs of the project.

- consultants who want the ability to deliver a rich web application that has security, server push data delivery, reusability and most of all the ability to deliver a user interface solution as quickly as possible.

- designers, and business analysts who want to be able to define a user interface and possibly implement early prototypes without the need to rely upon a development team early in the project.

The list above is not exhaustive. AltioLive is also used as an OEM tool within larger products to allow the development of highly customisable, maintainable user interfaces, and within financial institutions to deliver very rich and interactive user interfaces where data is pushed from the server to multiple users.

AltioLive applications can be edited within Eclipse or a simple text editor if you really want to; after all, the underlying logic and screen definition are written to XML files in a declarative language. Many of our competitors take this approach now. The strength of Altio is WYSIWYG: you build the user interface interactively.

The bottom line is that I want to see Altio deliver a simple to use application development tool that can be used by someone with the most basic programming knowledge. This in turn should mean the performance of project teams is increased, thus increasing the chances of delivering IT projects at cost and on time.

originally posted to www.altio.com

Monday, 22 September 2008

Design Methodologies - where do you want to spend your money?

I recently revisited articles on design methodologies, specifically TOGAF (The Open Group Architecture Framework) and Agile methodologies. Initially, I thought that the two approaches contradicted each other and so could not be used together. However, as with writing software, you can combine the best practices from different methodologies that meet your project requirements, improving the chances of a successful project.

I'm not going to pretend to be a TOGAF professional; I have an awareness of the framework but have never used it end to end. I believe that if you are going to design software then you should at least be aware of frameworks such as TOGAF and the alternatives. A good start on agile is Martin Fowler's "The New Methodology" and "Can SOA be done with an agile approach".

TOGAF combined with Zachman framework is a top down design methodology and provides an excellent mechanism for eliciting requirements and providing a framework for communication in a common language, but as Fowler states big up front design can become very expensive. Also, it can lead a project to not deliver what is required by the business when the system goes live, rather it delivers what was required initially. The difference may well be dramatic.

A good reason for top down design is to do with procurement and the need to identify appropriate commercial products to use, a focus of TOGAF. Large organisations want to outsource at fixed price and the vendor organisation doing the work wants all the details up front to have the best chance of delivering the project at a profit. Again, Fowler makes a good point about the "Adaptive Customer" and how customers need to be adaptive in the way they engage with vendors. Although from my experience of being both the vendor and using vendors to do work there is never an ideal approach. A vendor wants to make as much money as possible while the customer wants to control the scope and spend as little as possible.

So where do you want to spend your money?

Up front with lots of design, then on lots of change requests and the end result will be lots of documentation that in theory should reduce the maintenance overheads, keeping the future costs down.

The alternative is relying upon highly skilled and talented software engineers who may or may not use a silver bullet, document the code or write any design material, thus making maintenance harder for someone unfamiliar with the code.

I have seen both top down and bottom up methodologies succeed and fail to varying degrees. It is my opinion that success is based upon good judgement and adaptability at the beginning and during the project on the part of everyone involved.

Combining the requirement analysis and control features of TOGAF with an Agile methodology, and the use of PRINCE project management looks like a good combination as long as you are adaptable in selecting the parts that make most sense for the project you are working on.

Thursday, 4 September 2008

Where does the time go...and technology extremism

Where did the time go... Wow! It's September and I can't actually think what happened to July and August, well I can and it's been a frenzy of work. For a number of years now I've worked on the basis that during my work year there are two quiet periods - August and December. These quiet times are when I can sit down and do all the little things I just don't have time to do during the rest of the year, one example is looking at a new programming language or technology trend in detail.

A quiet August didn't happen this year. I spent some of August cutting code, and that actually felt good as I haven't had to do real code for quite a while. When I say real code I mean the stuff that has to go into source control and be tested.

July and the rest of August have been consumed by roadmap and technology discussions, talking to existing and prospective clients. The discussions about system architecture and the critical analysis of technologies and how they should be applied are what I enjoy most. These discussions lead to a lot of creative thinking and there is certainly a lot of that going on at Altio - which I'm surprised we manage to do seeing how busy we are.

So will December be quiet for me? Nope. That's going to be my first big holiday for a long time, several weeks in Australia, and I can't wait. Although I am a little concerned about getting prepared: will I have time to even pack my bags?

Technology Extremist? - Google just released Chrome, their new browser, and you need Java 6 update 10RC1 or later to use the Java Plug-in. This leads me to wonder whether I am some kind of technical extremist. Chrome gets released on Windows only, so why not the Mac or Linux? Rhetorical question really - Windows dominates the desktop computer marketplace, which makes it the best place for testing new products. Except Microsoft uses the Apple Mac to test new ideas for Microsoft Office, which is why I like Microsoft Office on the Mac so much - so there's my other Technology Extremism, I like using an Apple Mac at home. The final extreme technology: I still believe in Java Applets for doing complex stuff in the Web Browser. This is why I sometimes get frustrated when people say Altio is great but why use Java; that's a different rant for another time.

Well, my opinion for a long time has been that Google is just Microsoft in disguise - it's out to dominate the world, well the web part of it at least. So should I bow to the masses and adopt JavaScript as the way forward for writing rich web based applications? Should I bow to the masses and go Microsoft and use .Net? After all, lots of people keep saying Java is dead (http://www.theserverside.com/news/thread.tss?thread_id=39066, http://www.artima.com/weblogs/viewpost.jsp?thread=221903, http://java.sys-con.com/node/169595, http://www.oreillynet.com/onjava/blog/2007/01/java_to_the_iphone_can_you_hea.html). I'm sure someone will come up with the bright idea of putting type safety into JavaScript, oh hold on won't that make it Java :-). Anyway, these are all my opinions, and at the moment I see no reason to listen to the news from as far back as 2006 that Java is dead. Sun Microsystems has at least sat up and realised there is still a long way to go and is re-invigorating the Java Swing teams, first step JavaFX.

As far as technology choice goes, you should ensure you have available in your toolkit a number of tools and the knowledge of how they should be used, that way you have a better chance of delivering the most appropriate solutions - on time, within cost and meeting the requirements.

Monday, 4 August 2008

Altio support for composite applications

In recent blog entries I have used the term mashup to describe building applications from reusable components. Having read recent blogs and press articles, I should have used the term composite application. Back in June 2007 I read the specification for Service Component Architecture and discussed this with John Young, who was at the time the CTO at Mantas Inc. John had ideas for enhancing the Altio architecture by making it more modular, beyond the use of linked applications in AltioLive 4.x. Reusability is something that I strongly support, but at the time I could not see a fully working solution being implemented in AltioLive 4.x. Re-engineering the AltioLive user interface to use Java Swing has made it possible to add functionality for creating reusable components.

Composite Applications

SOA is effectively a composition of services supporting business functionality. The missing functionality from most descriptions of composite application architecture is the user interface.

It is my belief that the only way to achieve true reuse of functionality within the enterprise is to consider vertical slices from the user interface to the data, identifying the reusable components within different verticals. This will enable business focused, reusable composite components that should be easy to test and implement. This in turn will mean the enterprise can achieve rapid turn around of business solutions to a high quality standard.

Attributes of composite application framework

In a number of recent discussions and news articles it appears that there is a resurgence of the term composite applications, Microsoft appears to be particularly active in this area with some very good articles on what a composite application framework should deliver, a summary of which is:

Alignment

Composition must promote alignment among key stakeholders. This could be internal alignment (align different groups within the enterprise) or external alignment (align with suppliers, customers, and partners).

- Solution-provider perspective: Solutions should align existing IT assets with the needs of business owners.

- Solution-consumer perspective: It should be easy to assemble composite applications that align groups within a company, and also across organizational boundaries. Frequently, this will require applications that support cross-functional processes and enable collaboration.

Adaptability

Composition must reduce time-to-response for the business when markets change.

- Solution-provider perspective: The platform for composition should provide adaptive range. This means that it should be easy to externalize points of variability within the solution, so that those artifacts can be changed easily without ripple effects across the rest of the solution. For example, this might mean changing business rules, user interfaces, and business processes.

- Solution-consumer perspective: It should be easy to reconfigure composite applications when the business must respond to changing market conditions.

Agility

Composition should reduce the time-to-benefit of enterprise applications by reducing the time-to-deployment of end-to-end solutions, reducing development and deployment costs, and leveraging best practices. Also, when the business must respond to changes quickly or handle external disruptions smoothly, it should be easy to modify composite applications to accomplish this.

- Solution-provider perspective: Assets that make up a composite application should leverage established industry standards that make the solution quick to build and easy to assemble together.

- Solution-consumer perspective: A deployed enterprise solution should enable quick decision making by business decision makers through real-time data flows and business-intelligence tools in the context of the automated process.

The full Microsoft article can be found at http://msdn.microsoft.com/en-us/library/bb220803.aspx.

For me, the grand vision of composite applications in the enterprise would be for development teams to create products that align with business requirements. This would be achieved using a framework that enables the attributes of adaptability and agility described above. The products would contain artifacts for servicing data requests and user interface artifacts that understand how to interact with the data requests.

In business terms there would be products or composite components for CRM, Billing, HR etc. Each of these composite components would be loosely coupled to the underlying technology supporting the business process to enable rapid change in technology requirements to support changing business demands. Development teams would be responsible for creating the components, but solution teams would be responsible for putting together the final application using the appropriate composite components.

Altio architecture and AltioLive as a composite application framework.

The introduction of Composite Service Requests to the Altio architecture in AltioLive 5 and the ability to create modules for related groups of services was the first intentional step towards making the Altio architecture a framework for composite applications. AltioLive 5.2 will see the introduction of composite components that will allow user interface functionality to be packaged as a widget for re-use across many applications.

It was never an intentional path for the Altio architecture to deliver a support framework for composite applications, the roadmap has arisen from business demand. This makes the Altio architecture and AltioLive 5.2 product extremely powerful tools for implementing agile solutions which are adaptable and aligned with business requirements.

I have yet to decide if there is a demand for Altio to provide the ability to interact with OSOA definitions; the first step will be to provide better integration with XSDs.

Conclusion

It is my opinion that the days of implementing tightly coupled user interfaces are nearing an end. Composite components make the quality attributes of portability, maintainability and usability far easier to achieve, whereas a user interface that is tightly bound to a specific solution will normally take longer to implement and typically requires far more work to modify. That said, it often takes the creation of a specific user interface solution to identify which parts are worth turning into reusable components. It is then important that a tool is used that allows components to be created from the different parts of the specific user interface.

Bibliography

OSOA, Service Component Architecture http://www.osoa.org/display/Main/Service+Component+Architecture+Specifications

Microsoft, Composite Applications, http://msdn.microsoft.com/en-us/library/bb220803.aspx

Wikipedia, Service Component Architecture http://en.wikipedia.org/wiki/Service_component_architecture

EDS, Composite Applications and Portals http://www.eds.com/services/compositeappsandportal/

Bill Burnham Blogs, Software's Top 10 2005 Trends: #8 Composite Applications http://billburnham.blogs.com/burnhamsbeat/2005/03/softwares_top_1_1.html

(This article was originally posted to http://www.altio.com )

Sunday, 29 June 2008

AltioLive enabling the enterprise mashup

The AltioLive product provides both server side and client side architecture. This enables a secure load balanced mechanism to deliver data to and from a client. The client can be a user interface or another system.

What does this have to do with AltioLive? It has been the belief at Altio for a long time that a mashup does not have to be visual, but can be associated with data as well. For effective delivery of business solutions a product needs to be able to deliver data from multiple originating sources and the user should not need to know or understand the source of the data. AltioLive provides composite components to create visual business solutions and on the server side aggregated data feeds, this provides an effective mechanism to deliver browser based applications that interact with data from multiple sources.

A recent article in SD Times by David Linthicum provides a good overview of this, with the visual mashup described as

"the ability to change the manner in which a visual interface behaves by mashing it up with other content or services"

(Software Development Times, June 15 2008, page 37)

The article describes a non-visual mashup as

"the mashing up of two or more services to create a combined application, or integration point to service a business process". (Software Development Times, June 15 2008, page 37)

AltioLive composite controls enable a designer to take several existing components and package them together as a new business component or enterprise mashup widget. This means a library of re-usable components can soon grow and result in reduced development time for future solutions.

The AltioLive aggregated data feed provides a simple yet powerful workflow for retrieving data from disparate data sources. It is possible to use data attributes from one source as a parameter for the retrieval of data from another data source, execute multiple requests in parallel or sequentially and stop execution if a failure occurs. This is the non-visual mashup.
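To make the workflow a little more concrete, here is a minimal sketch in Python of the same idea. It is not AltioLive code or configuration: the three lookup functions, the `FeedError` exception and the sample data are all made up for illustration. It shows one request feeding an attribute into two later requests, the later requests running in parallel, and the whole feed stopping as soon as a step fails.

```python
from concurrent.futures import ThreadPoolExecutor


class FeedError(Exception):
    """Raised when a step in the aggregated feed fails (hypothetical name)."""


def fetch_customer(customer_id):
    # Hypothetical first source: returns a record whose account_id
    # attribute is needed as a parameter by the later requests.
    customers = {"c1": {"name": "Acme Ltd", "account_id": "a42"}}
    if customer_id not in customers:
        raise FeedError(f"unknown customer {customer_id}")
    return customers[customer_id]


def fetch_balance(account_id):
    # Hypothetical second source, keyed by the attribute from the first.
    return {"a42": 1250.0}[account_id]


def fetch_orders(account_id):
    # Hypothetical third source, independent of fetch_balance.
    return {"a42": ["order-1", "order-2"]}[account_id]


def aggregated_feed(customer_id):
    # Sequential step: if this raises, nothing after it is executed.
    customer = fetch_customer(customer_id)
    account_id = customer["account_id"]

    # Parallel step: the two lookups do not depend on each other.
    with ThreadPoolExecutor(max_workers=2) as pool:
        balance = pool.submit(fetch_balance, account_id)
        orders = pool.submit(fetch_orders, account_id)
        # result() re-raises any exception raised inside a worker.
        return {
            "name": customer["name"],
            "balance": balance.result(),
            "orders": orders.result(),
        }
```

Calling `aggregated_feed("c1")` returns one combined record, while an unknown customer id raises `FeedError` before either of the parallel requests is attempted.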

Whatever you want to call this approach the ultimate aim is to deliver an application that makes the end user more effective in delivering business benefit as quickly as possible, without losing sight of maintainability.

The key factors of SOA/enterprise mashups as described in the article are:

- the ability to place volatility into a single domain, thus allowing for changes and for agility

- The ability to leverage services, both for information and behavior.

- The ability to bind together many back-end systems, making new and innovative uses of the systems.

This is something Altio continues to deliver through the AltioLive product as innovative ideas.

(This article was originally posted to http://www.altio.com )

Monday, 2 June 2008

RIA + SOA – The AltioLive Solution

I finally managed to get around to reading magazines I picked up at JavaOne and one article stands out for me. SOA World Magazine, June 2008 (http://soa.sys-con.com/read/513263_2.htm) contains an article called "RIA + SOA : The Next Episode, Building next-generation web platform" which describes how RIA and SOA are shifting web applications architecture back to Client Server from Model View Controller (MVC). So I've decided to pick up on this article and analyse it in the context of AltioLive which uses a far simpler implementation methodology – basically all the hard work is done for you.

Complementary Technologies

I believe Rich Internet Application (RIA) frameworks provide a complementary technology solution to enabling the user interface for Service Oriented Architecture (SOA). To quote the SOA World Magazine article:

"in the standards-based world of HTML, CSS, and JavaScript, RIA developers have to assemble multiple third-party libraries to build rich user interfaces." AltioLive provides a single framework to enable the rapid development of rich user interfaces and provides a loosely coupled server side framework to make use of SOA and legacy data sources. This enables an Agile development approach to be used to deliver a user front end that can be adapted quickly to meet user requirements.

Poaching the headers from SOA World Magazine

This section takes the headers from the article and replaces the list of possible HTML, CSS and JavaScript options with the simple AltioLive framework implementation. In my opinion having a choice of different frameworks and custom JavaScript is a maintenance nightmare and is something the AltioLive architecture tries to avoid.

- Design the "look" of the application.

For a complex solution requiring branding, a graphic designer can create and define an AltioLive skin. Skins provide the look and feel of borders, scroll bars etc., with no need to understand HTML or CSS. For business developers who just want to get on and provide end users with a working system this saves a lot of effort without the need to think about colour codes and styles etc. The design of the user interface is a simple case of dragging and dropping widgets, setting properties and binding them to data.

- Integrate Widgets.

If a component is not defined as a standard control or business widget (AltioLive Composite Control) then a Java developer can create a new Swing or JavaFX widget by extending the base AltioLive custom control or extending existing controls.

- Add dynamic behaviour to the user interface

see next section

- Consume Services

No need to do anything in AltioLive, dynamic user interface interaction with the server comes for free. Plus with AltioLive you get context based routing, ability to poll database tables or HTML service to simulate real time updates to the client. If the infrastructure is available then simply subscribe to a message queue using JMS service requests.

Not to forget the ultimate aim of connecting to a SOA web service – then just use the Web Service Wizard to create a SOAP service request. No complicated APIs to understand and no code to implement, all the business logic is either in the web service or the SQL statement. Validation and data manipulation can all be done in the AltioLive user interface if necessary.

- Create Services

If the server side business logic does not exist then some hard work will be required. Web Services or alternative business services will need to be written, if a short term solution is needed then it may be possible to use AltioLive JDBC Service Requests to retrieve and insert data while putting logic into the user interface.

The article goes on to discuss the need to "Provide an Open Widget Framework". I feel that this is possibly the most important point. The industry needs to have standards that enable Java Applets, Microsoft Silverlight, Adobe Flash and AJAX widgets to communicate effectively so that a widget that meets the user's needs can be selected regardless of the underlying technology. The key to enabling this standard will probably be JavaScript as it is not tightly coupled to any single vendor solution.

Provide an Integrated RIA Programming Model. This integrated model exists if you use AltioLive. The primary focus for AltioLive is on creating a user interface as simply, quickly and cheaply as possible – through drag and drop and setting properties. The source of the user interface is stored in a plain text file as XML and so can be manually edited if there is a real desire to do so. The drawback of this is that developers can't get their hands as dirty as they may like to. If, as a developer, you really want to get into the depths of the code then you can write your own widgets, or focus upon the business logic at the server side. I believe Todd Fast is right when he made the statement "Applications for the masses by the masses: why engineers are an endangered species" (http://www.regdeveloper.co.uk/2008/05/09/developers_endangered_species/ ); the user interface should be about making it easy for the user. That said, I believe there will remain a need for software engineers, but their focus should be on providing frameworks and the enablers for the masses to easily create applications.

Provide an Integrated Services Platform. Again AltioLive meets the need to have the following attributes:

- Support for creating services in any programming language

- Seamless interoperability between the RIA and SOA tiers

- Ability to consume local mock services

The AltioLive Presentation Server provides the mechanism to interact with server side components and allows for JMS, JDBC, SOAP and HTTP connections. This means it is possible to make use of direct SQL connections or to write a web service using .Net and call it through the SOAP or HTTP request. While AltioLive is primarily a Java technology, its loosely coupled framework doesn't make Java based services mandatory.

As mentioned throughout this document, AltioLive provides a simple approach to create a service request using one technology and allows migration to another with little or no impact upon the overall application architecture, thus providing seamless interoperability between the RIA and SOA tiers.

Mock services have been a part of AltioLive since its inception. The AltioLive IDE allows a service request to be created with example response data built in; more complex solutions can be created using Composite Service Requests so that different responses can be provided based upon input values. When testing the user interface it may be preferable to replace heavyweight services with an XML file that contains the expected response and then use an HTTP service request to retrieve the data from the XML file. The HTTP service request can simply be replaced with the request to the fully implemented business logic later in the implementation.
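As a rough illustration of the mock service idea, the Python sketch below keeps canned XML responses keyed by an input value and hands them to user interface code that only cares about the shape of the XML. Everything in it is an assumption made for the example: the `<rates>` document, the function names and the values are invented, and in-memory strings stand in for the XML files an HTTP service request would fetch.

```python
import xml.etree.ElementTree as ET

# Canned responses keyed by an input value (invented sample data),
# standing in for XML files that a mock request would retrieve.
MOCK_RESPONSES = {
    "GBP": "<rates><rate currency='GBP'>1.00</rate></rates>",
    "USD": "<rates><rate currency='USD'>1.48</rate></rates>",
}


def mock_rate_service(currency):
    """Mock service request: return the canned XML for the given input."""
    xml_text = MOCK_RESPONSES.get(currency, "<rates/>")
    return ET.fromstring(xml_text)


def show_rate(service, currency):
    """UI-side code: depends only on the XML shape, not on its origin."""
    rate = service(currency).find("rate")
    if rate is None:
        return "n/a"
    return f"{rate.get('currency')} {rate.text}"
```

Because `show_rate` takes the service as a parameter, the mock can later be swapped for a function that calls the real business logic, provided it returns the same XML structure.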

Conclusion

The business challenge of moving to SOA

I have yet to work with an organisation that has implemented a SOA architecture from scratch as a green field project. In my experience there has always been legacy integration required to deliver the final solution.

AltioLive overcomes this by allowing the implementation team to expose AltioLive Service Requests. An AltioLive Service Request exposes the same interface regardless of the underlying technology that the data is being retrieved from or written to. This means that an implementation team can initially create a JDBC connection direct to a database and then when a full SOA architecture exposing web-services is in place then the service request can be changed to a Web Service request without any changes to the user interface. This approach enables the rapid development of the user interface required to meet business needs and then a more formal approach can be used to deliver a well defined and structured SOA.
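The migration path described here can be sketched in a few lines of Python. This is only an illustration of the principle, not how AltioLive implements Service Requests: the class names, the `customer` table and the `<customers>` XML shape are all hypothetical, `sqlite3` stands in for a JDBC connection, and a plain function stands in for a SOAP call.

```python
import sqlite3
import xml.etree.ElementTree as ET


def to_xml(rows):
    """Shared response shape: <customers><customer id=".." name=".."/></customers>."""
    root = ET.Element("customers")
    for cust_id, name in rows:
        ET.SubElement(root, "customer", id=str(cust_id), name=name)
    return root


class SqlServiceRequest:
    """Stage 1: read straight from a database (sqlite3 stands in for JDBC)."""

    def __init__(self, connection):
        self.connection = connection

    def execute(self):
        cursor = self.connection.execute(
            "SELECT id, name FROM customer ORDER BY id"
        )
        return to_xml(cursor.fetchall())


class WebServiceRequest:
    """Stage 2: delegate to a service call (a function stands in for SOAP)."""

    def __init__(self, call_service):
        self.call_service = call_service

    def execute(self):
        return to_xml(self.call_service())


def customer_names(request):
    """UI-side code: unchanged whichever request type is plugged in."""
    return [c.get("name") for c in request.execute().findall("customer")]
```

The point of the sketch is that `customer_names` never learns which request type it was given; as long as both return the same XML structure, the switch from the database to the web service happens entirely behind the `execute()` call.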

And what about the Enterprise Service Bus (ESB)?

Well, using AltioLive the move to an ESB is achieved by modifying the AltioLive server side request to use message queues. If the solution implemented using AltioLive has been well designed then there will be no changes to the XML structure passed to or from the user interface, and now a system will be able to push updates from the message queue to the user interface without any major system changes to the presentation layer.
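A very small Python sketch of the push idea follows, with the caveat that it is purely illustrative: the standard library `queue.Queue` stands in for a JMS queue on the bus, and `PresentationLayer`, `on_update`, `pump` and the message fields are hypothetical names rather than anything from AltioLive.

```python
import queue


class PresentationLayer:
    """Stand-in for the user interface: keeps the latest price per row."""

    def __init__(self):
        self.rows = {}

    def on_update(self, message):
        # Same message structure whether it was polled or pushed.
        self.rows[message["id"]] = message["price"]


def pump(message_queue, subscriber):
    """Deliver every queued message to the subscriber; return the count."""
    delivered = 0
    while True:
        try:
            message = message_queue.get_nowait()
        except queue.Empty:
            return delivered
        subscriber.on_update(message)
        delivered += 1
```

A back-end system would put messages such as `{"id": "VOD.L", "price": 131.5}` on the queue, and `pump` forwards each one to the presentation layer, which only ever sees the message structure and not the transport behind it.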

Thursday, 22 May 2008

Fire people who think they're entitled to run things

Disruptive Influence

Having had time to think about the question I now believe there are stages before reaching the formal dismissal process. I feel the reason for getting to the point of firing someone should only be based upon the team and organisation as a whole: the team can't get on with them, or the person is disruptive to the success of a project or organisation. This is summarised really well in an article by Ben Leichtling, "Fire people who think they're entitled to run things". A manager or senior professional in an organisation has a responsibility to deliver projects to a high standard; if someone makes that hard to achieve then there is a serious problem. The disruptive influence of some team members can make it really hard for a manager to deliver a successful project, or to feel in control of the project. Personally I would try to work with the person by understanding what motivates them and explaining why it is important to work as a team and within the guidelines set out by the organisation. There is also the case that they may have valid arguments for doing things differently and are not communicating them effectively. In the end someone has to be in charge and people have to accept authority, so if all else fails you have to embark upon the path of dismissal.

Luckily, I've never been in the position of working with really obstructive people, and where issues have occurred it has been possible to resolve them through compromise or, if necessary, through being assertive. Occasionally I'd love to say "Look, if you don't like it then find a new job", but that is hardly professional. I'd prefer to maintain the moral high ground.

Constructive Influence

There is an alternative to the disruptive influence and I have used this successfully with like minded colleagues. This alternative is to use constructive and critical analysis. Putting a lot of clever and experienced IT people into a room can lead to a conflict of egos. Each person is bound to have an opinion about how to design a solution or deliver a project, and may think theirs is the best solution. The challenge is to make use of all of the different ideas to perform critical analysis. Someone coming into a design meeting that is using critical analysis after it has started may believe the team is in conflict and not achieving anything, because of some of the heated debates that can take place. Critical analysis will only work well if everyone understands it is taking place and can be professional in accepting other people's views and constructively analysing them. Eventually the best parts of each idea will start to come together until it is possible to arrive at a solution using the best of the ideas.

The key to success is that someone has to be in charge and have the authority to make the hard decision of intervening at the right moment to influence the design, and to stop the process when it is taking too long or the best possible solution in the time available has been delivered.

Team Success

I don't claim to be an expert on the psychology of teams but I do have a lot of experience of managing very capable IT teams. From my experience I can conclude team success can only be achieved if everyone works together and accepts that there is a structure. Sometimes managers have to earn respect and in the worst case they have to take firm action to ensure success. The bottom line is that a manager can "fire" someone working for them; it never works the other way around. So even if a team member thinks the manager is wrong, it is down to a professional team member to influence the senior person so that the correct approach is used.

Friday, 9 May 2008

JavaOne2008. Thursday roundup

It seems like a long build up to the release of JavaFX and the new browser plugin and I can't wait for it to happen. As Jim mentions in his summary of day 3 I think there will now be a concern amongst AJAX followers that the technology is going to look dated. At the moment AJAX front ends that want to be really rich seem to rely too much upon Flash, look at Google Analytics - all the charts are Flash.

Even today AltioLive's Graph control that will be released in Altio 5.2 shows the power of Applet based technologies over JavaScript. There are several JavaScript based graph widgets but they tend to be slow when handling lots of data.

I strongly believe that Java 6 has again made Java Applets an option for consumer Rich Web Applications, so they can come out from hiding in the enterprise solution area, and JavaFX further strengthens the position of Java Applets as a solution.

I'm sure that if you talk to the Java SE team they will strongly disagree with the idea of JavaFX being "stillborn". Healthy competition between SilverLight, Flash, Applets and AJAX is required to provide end users with the best user experience possible.

If you want to see a real FilthyRich Web Application look at the AltioLive IDE. As Jim puts it, we "Eat our own dog food". I don't believe a product can truly say it is good unless you can create an IDE using the underlying framework. I like Eclipse but there must be something wrong if AJAX and Flash don't have IDEs using their own technology. NOTE: there are some AJAX IDEs out there.

Thursday, 8 May 2008

Can all developers juggle

The reason why I ask is that we were giving away juggling balls at JavaOne2008 and I'm amazed at how many people at JavaOne can juggle, the best I saw was 6 balls. What I really want to know is if there is a correlation with how good a software engineer is and their ability to juggle. I'm not going to do a survey on this but if someone discovers the answer and it happens to be yes then all interviews I do in future will require:

- Presentation

- Interview

- Juggling exercise

JavaOne2008. A day for applets and reporting

We met a number of Sun Microsystems staff today, some we expected to meet and others by chance. I asked Ethan Nicholas, who works on the Java JVM kernel, if it was possible to get two applets to communicate with each other without using JavaScript and guess what, it may not be a simple task (the question was asked during our talk yesterday).

Jim's thoughts for versions of Java earlier than 1.6u10 would be to use static variables/classes, the problem with this approach is that both applets need to be aware of each other and would be tightly coupled, not Web 2.0 at all. At least this would have worked though.

With the delivery of Java 1.6u10 it's going to be even harder for Applets to communicate in a browser, as each Applet will run in its own virtual machine and so globally available static classes are not an option. So it's back to JavaScript; on the plus side Ethan appears keen on the idea of making this happen. I think a solution is needed if Java Applets are to be used on the desktop as demonstrated in one of the keynote talks. The standard for desktop applications communicating has been set by Microsoft, so Java will need an equivalent communication mechanism for desktop applications to really succeed, and it needs to ensure applications are loosely coupled.

I regret not seeing the talk on JavaFX where dropping widgets onto the desktop was shown and it seems that it was not made clear during the talk that this ability to drag an applet to the desktop was a plugin feature and not JavaFX. Jim, Tom and I had to explain this several times to people visiting the booth.

I'm going to try and spend the day tomorrow looking at all the different reporting tools. There's a great fit between RIA and reporting, and there are lots of possible products to choose from; it's just a matter of identifying how easily AltioLive could integrate with different reporting products.

Wednesday, 7 May 2008

JavaOne2008. Java applets still have the power to draw a crowd

Conclusion for Tuesday 6 May - Java 6 update 10 provides a whole new opportunity for Java Applet technologies to compete on a level playing field with Adobe Flex and Microsoft Silverlight. The combination of improved download options and faster JVM load times with JavaFX rich graphics will enable the AltioLive product to focus upon adding value to the Java development community rather than overcoming problems with the Java SE plugin technology. 2008 and 2009 provide many opportunities for Java Applet technologies and I feel confident enough to say that Applets are NOT an "old school" technology, and are here to stay for the foreseeable future.

Loss of focus by Sun Microsystems and/or the Java product team is the only concern. If enough Java developers push for improved performance and simpler user interface APIs, Sun will need to meet the demands. It's about working as a "community", considering all the options and not thinking Adobe and Microsoft offer the only solution to rich user interfaces in a web browser.

Enough of my soap box rant and a quick review of the day.

The booth had a steady flow of people interested in both the freebies and our product. The interest in the product was really great to see, making all the hard work to improve AltioLive worthwhile, and it shows the Java development community still has an interest in Java Applet technology.

Jim and I guess about 80-100 people came to the presentation, and given that there were a number of very big talks by popular speakers I was pleased. Aside from not feeling too good (a serious lack of sleep), the talk went really well, with a few lessons learnt should we do a talk again next year.

The question that catches you out! - "How do you get two applets to communicate in the same browser session?" Jim put a lot of effort into his examples and neither of us spotted the fact that we didn't do an example of Java Applets talking to other Java Applets. Is direct communication possible and, more importantly, should the security model allow it to happen? Not something I plan to answer in this blog.

Jim gets his 10 minutes of fame- a surprise visit by the JavaOne2008 reporter caught us out and Jim had to do a 10 minute technical interview about applets and AltioLive - good interview Jim especially when it was at the end of a very long day.

Tuesday, 6 May 2008

JavaOne2008. Booth ready to go

San Francisco day 1.

Our first full day at JavaOne 2008, and I think all of us are very tired - it took us nearly all day to prepare the booth and demos, and to practise the talk that Jim and I will do tomorrow.

The day started at 6am this morning with a vigorous walk up some very steep hills, I'm amazed the houses don't just slide down the hills.

Tom has put together some good videos of AltioLive designer and applications that we will play back through the next few days. Even before they were complete people were stopping and looking at what was happening.

Tom and I decided that the Community One speed dating event just wasn't for us. Wives and girlfriends should not worry, it was all to do with meeting up with other organisations and discussing how you could benefit each other - we were just too tired to meet 21 companies in 45 minutes.

Everything has gone to plan so far at the event. Although, the BIG worry is the Altio website, we have had a few issues with the website and hope they will be fixed by tomorrow. If you have difficulty accessing www.altio.com, try the alternative http://altio.com , http://www.altio.co.uk. Our service provider is working hard to investigate the problem and we hope to have this resolved soon.

For those of you attending JavaOne2008 and who read my posts I look forward to meeting you.

Friday, 2 May 2008

New AltioLive website goes live

The new AltioLive website is now up and running, just in time for JavaOne!

All going well AltioLive 5.2 will be available for download as a Beta release very soon.

April was busy and May is even busier.

Go have a look at the new site at www.altio.com

Wednesday, 30 April 2008

New acronyms for the IT industry

I first saw WOA mentioned in 2006 on a zdnet blog titled "The SOA with reach: Web-Oriented Architecture". It was posted on the 1st April so I had to take a deep breath and make sure it wasn't a joke. My point is that WOA is just an extension of SOA and isn't something newly invented; in my opinion it is just the natural progression, and shows how the IT industry can adapt to business or user demands, or just prove that something new can be invented. Unlike technology specific trends like Java, Groovy, JRuby etc., which are created to improve the original technology they either replace or are based upon, the SOA and WOA acronyms are labels for theories and practices. The benefit of these labels is that they provide a focus for identifying the key requirements that make up the theory, and let people in the industry categorize and know what is being discussed.

So why has it been so difficult to identify the requirements for labelling a Web 2.0 product/solution or component (WIKI entries seem to debate what Web 2.0 is, rather than provide a specific definition)? I believe it is because Web 2.0 is a social phenomenon and has been driven by business or user needs to have information in an easily accessible format and to be able to configure that data to meet their own needs. Enabling this requires rich user interfaces provided through Rich Internet Applications (RIA).

More acronyms evolved from SOA:

- User Oriented Architecture (UOA), not sure this exists yet. If it did there would be a close alignment with Business Oriented Architecture. The basics are that users would have maximum flexibility to work with UI widgets and data objects to generate screens how they want them. This could be viewed as an extension to Business Intelligence (BI).

- Business Oriented Architecture (BOA) makes use of BPM and SOA to provide flexible and scalable systems which will enable an organisation to adapt quickly to a changing market place. I believe the driver here is senior executives and the IT department trying to meet business requirements.

Of the two I believe User Oriented Architecture is the most powerful. It can provide great benefits through empowering end users to access data on demand. It could also cause a lot of damage to a business by users manipulating data in an incorrect manner either intentionally or unintentionally resulting in incorrect decisions being made.

Is Web 2.0 just User Oriented Architecture or will Web 3.0 provide this? I believe the answer is no, if User Oriented Architecture were ever to become an adopted term I feel there would be a demand for highly configurable and yet very simple user tools (NOTE - I'm not talking developer IDE's here). These tools would initially be aimed at power users but would eventually reach into the mass market, and would not require in-depth code or development experience, the first steps are mashups or Web 2.0 components and tools such as Yahoo Pipes.

In my opinion the Enterprise is lagging behind in adopting technologies for end users, and in some cases for good reasons. There needs to be some control over how data is accessed and manipulated otherwise there is the risk of not knowing if data is "a fact" and what data has evolved from a mashup of facts to deliver what people want to hear.

Anyway, back to where I started: Object Oriented Design and Programming are very powerful, but if you keep extending the original concept (object), things just end up becoming overcomplicated and overused, so diluting the original purpose. Do we need to re-factor some of the terms used in the IT industry to make things simple and more maintainable?

References

- http://www.webware.com/8301-1_109-9923360-2.html?tag=blogFeed

- http://www.gartner.com/DisplayDocument?doc_cd=114358

- http://blogs.zdnet.com/Hinchcliffe/

- http://www.bptrends.com/publicationfiles/TWO%2003-07-ART-ABusinessOrientedArchitecture-Gilbert-Final.pdf

- http://pipes.yahoo.com/pipes/

- http://en.wikipedia.org/wiki/Web_2.0

- http://en.wikipedia.org/wiki/Web_3

Saturday, 26 April 2008

Where are all the applets?

A recent posting on Jim's blog "Eat your own dog food" mentions www.upnext.com, a cool Applet that provides a 3D view of Manhattan (useful for SIFMA if you're going in June). I posted a comment on Jim's blog but I also wanted to make my own posting.

I'm now wondering how many other Web 2.0 Applets there are out there - upnext is one I will try to mention in my talk at JavaOne 2008.

I have nothing against AJAX, Flash or Silverlight, but I do believe that Applets are being unfairly treated and I'm surprised Sun doesn't have a library of Applet based Web 2.0 sites, or a library of Applet based products (maybe they do and I've been too lazy to find it).

So my pet project for the next few months will be to find more great Applet products....

Go see Altio at JavaOne 2008.

Tuesday, 22 April 2008

AltioLive Google Social API Demo

The only comment I have is that Blogger blogs seem to be difficult to analyse. IMHO this is strange because Google Social API and Blogger are both Google products. So if you enter http://thompson-web.blogspot.com/ not a lot happens, but if you enter http://www.heychinaski.com/blog/ it does just what's expected and finds a social network. I suppose it could be argued I have no social life, which is why no network appears for me :-)

NOTE: The tinyurl mentioned above may no longer point to the demo in the future as the demo may only be available through the Altio website

Thursday, 17 April 2008

Project estimation (duration, effort) and Project Failure

Several times recently I've been involved in discussions about project estimation, sometimes with project managers and other times in general conversation about project failure. Here is my opinion on why both duration and effort are important in estimation and neither can be ignored. This is my personal opinion; every project and organisation may differ and should be treated appropriately by using the correct project management and software design methods.

Background on Project Failure

The UK National Audit Office summarises the common causes of project failure as:

NAO/OGC Common causes of project failure

1. Lack of clear link between the project and the organisation's key strategic priorities, including agreed measures of success.

2. Lack of clear senior management and ministerial ownership and leadership.

3. Lack of effective engagement with stakeholders.

4. Lack of skills and proven approach to project management and risk management.

5. Lack of understanding of and contact with the supply industry at senior levels in the organisation.

6. Evaluation of proposals driven by initial price rather than long term value for money (especially securing delivery of business benefits).

7. Too little attention to breaking development and implementation into manageable steps.

8. Inadequate resources and skills to deliver the total delivery portfolio.

I define project failure as one that either goes over budget, over schedule or both, or fails to deliver what the stakeholders actually expected. Most media attention focuses upon costs and timescale, and let's face it, project failure is not isolated to IT projects - Wembley Stadium, the 2012 Olympic Bid and the Millennium Dome were not IT projects. Until recently Heathrow Terminal 5 was hyped as the way to run projects (Agile); yes, it may have been on time and on budget but in the end it failed to meet stakeholder expectations – the users of the terminal were far from happy and executives lost their jobs.

The importance of duration and effort in estimation

When I ask for estimates I always ask for two numbers, the duration and the effort, just so that it is clear to me how much the work is costing and how long it will take to deliver. When it's an external contractor I'm interested in duration and the bottom line cost, not the effort, so this note applies to internal projects.

Effort is the direct cost of running the project and I would expect a project manager to be able to break down the estimate into deliverables/artifacts/tasks/function points – for me they are all the same, a quantifiable item of work. The quantifiable item of work can be listed in a Scrum burn-down list or an item in a project plan, but the project plan and burn-down need to take into account duration, more on this later. This effort provides the basis for future estimation for performing the same or similar piece of work. Using a well managed timesheet system enables project managers to make better estimates for future projects based upon projects of similar size, complexity, and industry type (OK it's not quite that simple but I'm not writing a thesis here).

The effort estimate will be affected by a number of factors, e.g. sickness, holiday, training and meetings not related to the project: all the daily tasks that an employee is expected to undertake. This is what makes up the duration estimate of the project; the bottom line is "how productive is a person each day in your organisation". So if the effort to complete a project is 100 man days but an employee can only spend 80% of their time doing productive work, then the duration is 125 days (100 / 0.8) to deliver the project.

(UPDATE - Oops. Basic math error: this originally said 120 days, it should be 125 days.)
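The effort to duration sum is simple enough to put in a few lines of Python. This is just a sketch of the arithmetic above; the function name and the productivity parameter are my own labels, not part of any timesheet product.

```python
def duration_days(effort_days: float, productivity: float) -> float:
    """Convert effort into duration.

    effort_days  -- productive work needed, in man days
    productivity -- fraction of each day spent on the project (0 < p <= 1)
    """
    if not 0 < productivity <= 1:
        raise ValueError("productivity must be greater than 0 and at most 1")
    # Dividing (not multiplying) is the step that is easy to get wrong:
    # 100 days of effort at 80% productivity is 100 / 0.8, not 100 * 1.2.
    return effort_days / productivity
```

With effort_days=100 and productivity=0.8 this gives the 125 days from the example, whereas adding 20% on top of the effort gives the wrong answer of 120.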

Estimating duration and effort means that a project can meet schedule and costs, but accurate estimation is only possible with historic data – which is why using accurate timesheet systems is needed.

Most people in software hate completing timesheets. I know this because I did when I used to cut code – it's an unnecessary distraction and stops you getting on with doing fun things like designing and writing software.

If there is to be any professionalism in software engineering then developers, testers etc. need to understand the importance of estimation. The problem is that every time a developer enters 8 hours of development time when they really worked 12, it sets false expectations for the project manager and stakeholders. The next time a project is estimated the project manager looks at the timesheets and thinks "if I pay for 2 hours overtime I can get more from my team" and the team end up working 14+ hour days. OK, nobody wants this and I firmly believe in the Agile 8 hour day, even if I don't practise what I preach, but the responsibility lies with everyone on a project to ensure effective project estimation.

Applying the appropriate estimation

At Altio PRINCE and Agile (Scrum) techniques are used to deliver projects. PRINCE provides the control and communication, the use of burn-down charts and daily meetings ensure project duration and deliverables are constantly monitored.

Effort estimates are used to calculate cost, and this is where it is important that staff book their time accurately; otherwise a project can fail on cost because staff spent most of their time on work that was not project related but booked it to the project (and I do draw the line at having a "Rest Room" or "Cigarette Break" task). For Altio projects we use several estimation techniques, the simplest being a spreadsheet that applies triangulation estimation using best, most likely and worst case scenarios.
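For anyone who has not seen a best / most likely / worst case estimate, here is a minimal sketch in Python. I am not reproducing the actual spreadsheet here, so both formulas are assumptions: a plain average of the three cases, and the commonly used PERT style weighting that counts the most likely case four times. The class and function names are made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskEstimate:
    """Three effort estimates for one task, all in the same unit (e.g. days)."""

    name: str
    best: float
    likely: float
    worst: float

    def simple_average(self) -> float:
        # Assumed formula: treat the three cases equally.
        return (self.best + self.likely + self.worst) / 3

    def weighted_average(self) -> float:
        # Assumed alternative: PERT style weighting towards the likely case.
        return (self.best + 4 * self.likely + self.worst) / 6


def total_effort(tasks, weighted: bool = False) -> float:
    """Sum the per-task estimates to get an effort figure for the project."""
    if weighted:
        return sum(t.weighted_average() for t in tasks)
    return sum(t.simple_average() for t in tasks)
```

A task estimated at 2, 4 and 9 days comes out at 5 days with the simple average and 4.5 days with the weighted one, which shows how much the choice of formula matters when the worst case is a long way out.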

A project manager then takes the estimated effort and populates a project plan with tasks and adjusts staff availability to get a duration.

To monitor a project in progress, duration is what matters, and the key is using burn-down charts with staff providing daily estimates of how long it will take to deliver. This estimate by the team members is pure duration: if the person is only managing to work 2 hours a day and there are 10 hours of work left, then the duration is 5 days. It's down to the project manager to find out why the person is only doing 2 hours a day and to manage the risks that this dilution of work effort causes.
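The burn-down sum can be sketched the same way. The function names are hypothetical, and the second function assumes team members work on their own tasks in parallel, so the project finishes when the slowest person does; a real plan with dependencies would need more than this.

```python
def remaining_days(remaining_hours: float, hours_per_day: float) -> float:
    """Duration left on a task given the hours actually worked on it per day."""
    if hours_per_day <= 0:
        raise ValueError("hours_per_day must be positive")
    return remaining_hours / hours_per_day


def project_days_left(burn_down: dict) -> float:
    """Longest remaining duration across the team.

    burn_down maps a person to (remaining_hours, hours_per_day).
    Assumes everyone works in parallel on independent tasks.
    """
    return max(remaining_days(left, rate) for left, rate in burn_down.values())
```

So 10 hours left at 2 hours a day is 5 days, and if that person is on the critical path then 5 days is what the burn-down chart should show, however little effort is actually outstanding.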

Constantly changing estimates

It is important to constantly review projects in progress and adjust estimates based upon knowledge from previous projects and deliveries. Using PRINCE gateways as the time to re-estimate is important, as it is the time to provide the details to the stakeholders for them to make decisions.

Conclusion

There are lots of debates online about project estimation and ultimately every project will be different because of the people working on it, the technology being used and the expectations of the stakeholders.

Software projects are all about developing new and innovative systems otherwise we would just buy the most appropriate product off the shelf. This means there is no blue print for accurate software estimation – software engineers are not laying bricks to build a house so there is no way to say how many bricks per hour a person can lay and apply that to all projects (the analogy being lines of code = bricks).

REFERENCES

Listed below are a number of useful links and documents that I use for reference.

- http://www.itprojectestimation.com/estrefs.htm

- The Holy Grail of project management success, http://www.bcs.org/server.php?show=ConWebDoc.8418 accessed March 2007

- Wembley Stadium Project Management, http://www.bcs.org/server.php?show=ConWebDoc.3587, accessed March 2007

- Olympic bid estimates, http://www.telegraph.co.uk/sport/main.jhtml?xml=/sport/2007/02/08/solond08.xml, accessed March 2007

- UK Government Post Note on NHS Project Failure, http://www.parliament.uk/documents/upload/POSTpn214.pdf, accessed March 2007

- UK Government Post Note on IT Project Failures, http://www.parliament.uk/post/pn200.pdf, accessed March 2007

- Project Failure down to lack of quality, http://www.bcs.org/server.php?show=ConWebDoc.9875 , accessed March 2007

- Steve McConnell, Rapid Development, Microsoft Press,1996

- Martyn Ould, Managing Software Quality and Business Risk, Wiley,1999

- Art, Science and Software Engineering, http://www.construx.com/Page.aspx?hid=1202 , Accessed October 2006

- Simple and sophisticated is the recipe for Marks' success, Project Manager Today, page 4, March 2007

- Ian Sommerville, Software Engineering 8th Edition, Addison Wesley, 2007

- Barbara C. McNurlin & Ralph H. Sprague, Information Systems Management in Practice 7th Edition, Pearson, 2004

- Overview of Prince 2, http://www.ogc.gov.uk/methods_prince_2.asp, Accessed November 2006

- The New Methodology, http://www.martinfowler.com/articles/newMethodology.html, Accessed October 2006

- The Register – IT Project Failure is Rampant http://www.theregister.co.uk/2002/11/26/it_project_failure_is_rampant/, accessed October 2006

- Computing Magazine – Buck Passing Root of Project Downtime http://www.computing.co.uk/itweek/news/2183855/buck-passing-root-downtime, accessed February 2007

- National Audit Office – Delivering Successful IT Projects http://www.nao.org.uk/publications/nao_reports/06-07/060733es.htm, accessed March 2007

- Successful IT: Modernising Government in Action, UK Cabinet Office, page 21

- Project success: the contribution of the project manager, Project Manager Today, page 10, March 2007

- Project success: success factors, Project Manager Today, page 14, February 2007

- Six Sigma Estimation http://software.isixsigma.com/library/content/c030514a.asp , accessed October 2006

- Analogy estimation http://www-128.ibm.com/developerworks/rational/library/4772.html, accessed January 2007

- Symons MKII Function Point Estimation http://www.measuresw.com/services/tools/fsm_mk2.html, accessed March 2007

- COCOMO estimation http://sunset.usc.edu/research/COCOMOII/ accessed January 2007

Wednesday, 9 April 2008

Using complex web services in Altio

Since 2003 AltioLive has had the ability to work with SOAP web services but we only have simple examples that use primitive variables. Recent professional service projects have required the use of Web Services which use complex input and output data types so I thought I would make some notes here before a more formal document is produced.

AltioLive was developed to make integration with SOA as simple as possible, but because of the broad set of functionality available in AltioLive it is not always obvious how to implement a specific solution. This note will highlight how to use AltioLive to work with SOAP messages and WSDLs.

What is a complex web service?

When I mention a complex web service I mean one that takes complex XML as input and returns complex XML. This is predominant now in the Enterprise where .Net and JAX-WS object serialization makes it easy to transform objects to and from XML, resulting in deeply nested XML hierarchies.

Listed below is one approach to using a complex web service in AltioLive. It is an alternative to the default AltioLive approach, which is to use controls mapped to parameters in the service request.

- Generate a template of the XML structure for use by Altio Screens. The template XML can be generated using Static Data, AltioDB, or a request to a template object.

- Map screen objects to the XML template

- Submit the XML to a SOAP service request.

- Map the response data to the required location

The Template

There are several options for providing the template of the complex XML. The general principle is that the application requires the XML structure that is to be passed to the Web Service, and the controls will be mapped to this XML structure.

Probably the simplest solution is to create an XML file containing the template and use this in AltioDB or as a file on the server which can be called from an HTTP request. Then create an HTTP service request to retrieve the template XML.

NOTE: Make sure the data keys are correct, as this will be a common reason why the data does not display correctly. This will be especially important for the response data.

The SOAP request

Using the WSDL wizard is probably the simplest way of creating the required SOAP request:

- Open AltioLive Application Manager

- Select WSDL from the service request types on the menu

- Enter the URL of the WSDL that declares the SOAP service you wish to use

- Click "Retrieve Operations"

- Select the "Operation" you want to use

- Click "Use Selected Operation"

These steps will create a new SOAP Service Request.

Because the service request will be using a complex XML structure to send and receive data, the "Parts" section of the Service Request will need to be modified. By default AltioLive will create a placeholder for each item of data that will be passed from the client to the request, as shown below:

<tt:create xmlns:tt='http://www.bbb.com/reports/ReportInstanceAdmin'>
  <tt:ParamData xmlns:tt='http://www.bbb.com/reports'>
    <tt:Schedule>
      <Recurrence>
        <DailyRecurrence>${client.DAILYRECURRENCE}</DailyRecurrence>
        <WeeklyRecurrence>${client.WEEKLYRECURRENCE}</WeeklyRecurrence>
        <YearlyRecurrence>${client.YEARLYRECURRENCE}</YearlyRecurrence>
        <MonthlyRecurrence>${client.MONTHLYRECURRENCE}</MonthlyRecurrence>
      </Recurrence>
    </tt:Schedule>
    <Params>
      <tt:InventoryParams>
        <ReportTitle>${client.REPORTTITLE}</ReportTitle>
        <TitleOpt>${client.TITLEOPT}</TitleOpt>
        <ReportCode>${client.REPORTCODE}</ReportCode>
        <Uid>${client.UID}</Uid>
      </tt:InventoryParams>
    </Params>
    <ReportInstance>${client.REPORTINSTANCE}</ReportInstance>
  </tt:ParamData>
</tt:create>

As the XML structure will be provided through a template the XML shown above can be replaced with:

<tt:create xmlns:tt='http://www.bbb.com/reports/ReportInstanceAdmin'>${client.PARAMS}:xml</tt:create>

The parameter reference ${client.PARAMS}:xml tells AltioLive to treat the parameter as an encoded XML block and to decode the XML when the WebService operation is called.

Processing the response

By default Altio will generate the response template using ${response.result}. This works fine for simple WebServices, but as this scenario uses complex XML the response body is the important part of the SOAP envelope, so the syntax to retrieve the message content needs to be ${response.body}; otherwise Altio will report that no XML was provided in the message.

To implement this change, edit the SOAP Service Request. The "Response" tab contains a field called "Literal XML string", which defines the structure of the XML that the results of the SOAP request are placed into. For this example use the default value but replace ${response.result} with ${response.body}, so that the "Literal XML string" looks like the following:

<DATA><SOAP><execute>${response.body}</execute></SOAP></DATA>

Client Action

The final part of using a complex web service is to implement the client logic. The first step is to retrieve the template XML, which is done through a simple request to the Service Request that returns the template XML. The second step is to implement the logic that passes the data to the SOAP Service Request that executes the SOAP operation. The syntax of the parameter passed to the SOAP Service Request is shown below:

PARAMS='eval(escape(xml-string(/ReportParams/*)))'

- xml-string() – serializes the XML element into an XML string.

- escape() – performs an HTML escape of the XML string to ensure correct transmission from the client to the server.

- eval() – informs AltioLive that this is a function execution block and that the content needs processing.

The "Parameter source" property of the "Server request" action block needs to be set to STRING, otherwise AltioLive will expect to find a control called PARAMS and use the content of the control for the XML structure.

Aside from the service request execution you will need to map controls to the XML.
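To make the encode and decode round trip concrete, here is a rough sketch in Python rather than AltioLive syntax. It only imitates what the PARAMS expression is described as doing: serialise the children of the template root, HTML-escape the result, and reverse the escape on the server side. The function names and the sample ReportParams content are assumptions made for illustration, not part of AltioLive.

```python
import html
import xml.etree.ElementTree as ET


def encode_params(root: ET.Element) -> str:
    """Imitate xml-string(/ReportParams/*) followed by escape()."""
    # Serialise every child of the root element into one XML string.
    xml_string = "".join(ET.tostring(child, encoding="unicode") for child in root)
    # HTML-escape the markup so it travels as plain text.
    return html.escape(xml_string)


def decode_params(encoded: str) -> str:
    """Imitate the server-side step that turns the escaped text back into XML."""
    return html.unescape(encoded)


# Hypothetical template content, used only for this sketch.
template = ET.fromstring(
    "<ReportParams><Params><ReportTitle>Stock &amp; Sales</ReportTitle></Params></ReportParams>"
)
encoded = encode_params(template)
decoded = decode_params(encoded)
print(encoded)
print(decoded)
```

The escaped string contains no angle brackets, which is why it can be passed as an ordinary string parameter, and decoding it gives back well-formed XML ready to be placed inside the SOAP body.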

Conclusion

The use of a Template for working with a complex XML structure is an alternative approach to using controls to pass the required data as individual fields. The benefit of using a template is that it allows the XML block to be manipulated by many screens and allows the XML to be built up from multiple sources if necessary.

The negative aspect of using template XML might be in migrating to a different technology. An example would be moving from a SOAP message to a REST format, where parameters would need to be passed rather than a complex XML structure. In my opinion, if the request is very complex then a SOAP message is probably far more effective and manageable than an equivalent REST message.

The AltioLive online help provides further details of using WSDLs and SOAP Service Requests, and if you are working in a SOA environment it is worth familiarising yourself with the different techniques for interacting with SOAP messages.

Monday, 7 April 2008

Getting ready for JavaOne 2008

Thursday, 3 April 2008

nHibernate - Filter and Criteria for applying dynamic where clause

The final solution makes use of Filters and SpringFramework, IMHO both tools that you cannot do without when working in Java or .Net.

Requirement

The system exposes complex WebServices which provide data-intensive processing based upon parameters supplied in complex XML. The .Net framework deals with serialization of the XML into objects. The requirement is to apply a filter condition only when the corresponding parameter is supplied in the XML. It is essential that maximum reuse of code is maintained.

Options

- Write a data access object with lots of if statements. The code would need to generate a string that forms the where clause of the select statement.

- Use nHibernate ICriteria or IFilter objects to apply the conditions to the SQL.

Option 2 was the method of choice, with little thought given to option 1. It was felt that option 2 would provide a more modular approach. Now to the point of this blog entry: there are subtle differences between ICriteria and IFilter, and I would recommend using IFilter, which I feel is a much more powerful mechanism for controlling the data returned in the object model.

ICriteria produced cartesian joins, so it did not truly reflect the object model. For example, if you have an Order object that can contain many OrderLines, you expect the ORM to produce one Order object that contains many OrderLines. Using Criteria in nHibernate produced many Orders (one per OrderLine), and each Order contained the correct number of OrderLines. The more Criteria applied to the query, the bigger the result set became. Also, objects returned using Criteria that contain Bags, Sets or Maps had the collection object populated without any filtering applied.
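The row multiplication behind this can be seen without any ORM at all. The sketch below is not nHibernate code; it uses Python's built-in sqlite3 module with made-up orders and order_lines tables to show that joining one order to its three lines returns three rows, so building one root object per row yields three Orders unless the rows are grouped by order id.

```python
import sqlite3

# In-memory database with hypothetical tables, for illustration only.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT);
    CREATE TABLE order_lines (id INTEGER PRIMARY KEY, order_id INTEGER, product TEXT);
    INSERT INTO orders VALUES (1, 'Acme');
    INSERT INTO order_lines VALUES (10, 1, 'Widget'), (11, 1, 'Gadget'), (12, 1, 'Sprocket');
    """
)

# Joining the parent to its children returns one row per child.
rows = conn.execute(
    "SELECT o.id, o.customer, l.product "
    "FROM orders o JOIN order_lines l ON l.order_id = o.id "
    "ORDER BY l.id"
).fetchall()

# One root object per row: the same order id appears three times.
naive_orders = [order_id for order_id, _customer, _product in rows]

# Grouping by order id gives the shape the object model expects.
grouped = {}
for order_id, _customer, product in rows:
    grouped.setdefault(order_id, []).append(product)

print(naive_orders)
print(grouped)
```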

It was at this point that I decided to focus my attention on the Filter functionality provided by nHibernate and it worked perfectly.

IFilter requires a little more effort in terms of code and producing the hibernate mapping documents, but it is well worth the effort. The filter ensures that only the required objects are returned and that Bags, Maps, and Sets are correctly populated with filtered objects. A filter is applied to the session, and the same filter definition can be used on many objects. Individual filters can be enabled or disabled as necessary.

Solution

The solution was to implement each filter condition in an object that determines whether the filter should be applied. The filter class retrieves the parameter value originally passed in the XML and applies the filter condition; nHibernate deals with the correct SQL syntax for the where clause. Each possible filter condition is put into its own class, and the classes are then chained together in an IList object which is iterated over by a control class. The configuration of the control class and filter classes is done in SpringFramework so as to provide maximum flexibility.

SpringFramework provides a flexible means to add or remove filters from a control class.

foreach (AbstractParamFilter paramFilter in commonFilterList)
{
    paramFilter.ApplyFilter(accountingParams, session);
}

The filter classes implement either an interface or an abstract class, thus providing the polymorphism.

Each filter class decides whether its filter should be enabled or disabled based upon the content of the object passed to it.
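As a language-neutral sketch of that pattern (written in Python, not the original C#), the classes below show a chain of filter objects that each inspect the supplied parameters and enable or disable one named filter on the session. FakeSession stands in for the ORM session and simply records the enabled filters. The filter names, parameter keys and concrete filter classes are all hypothetical.

```python
from abc import ABC, abstractmethod


class FakeSession:
    """Stand-in for an ORM session: records which named filters are enabled."""

    def __init__(self):
        self.enabled = {}

    def enable_filter(self, name, **parameters):
        self.enabled[name] = parameters

    def disable_filter(self, name):
        self.enabled.pop(name, None)


class AbstractParamFilter(ABC):
    """Common base so the control class can treat every filter alike."""

    @abstractmethod
    def apply_filter(self, params, session):
        ...


class AccountCodeFilter(AbstractParamFilter):
    def apply_filter(self, params, session):
        value = params.get("account_code")
        if value is None:
            # Parameter not supplied: make sure the filter is off.
            session.disable_filter("accountCodeFilter")
        else:
            session.enable_filter("accountCodeFilter", accountCode=value)


class DateFromFilter(AbstractParamFilter):
    def apply_filter(self, params, session):
        value = params.get("date_from")
        if value is None:
            session.disable_filter("dateFromFilter")
        else:
            session.enable_filter("dateFromFilter", dateFrom=value)


def apply_filters(filters, params, session):
    """Control-class loop: every filter decides for itself."""
    for param_filter in filters:
        param_filter.apply_filter(params, session)


session = FakeSession()
apply_filters([AccountCodeFilter(), DateFromFilter()], {"account_code": "A100"}, session)
print(session.enabled)
```

Because the list of filters is just data, adding or removing a condition means changing the list (in the original design, the Spring configuration) rather than the control loop.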

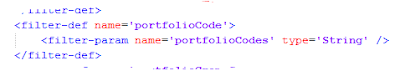

The NHibernate configuration needs to have a filter definition provided for properties or collection objects as shown below.

Finally, a filter definition and the parameter for the filter need to be defined in the hibernate mapping files. NHibernate chapter 14 provides the documented detail of what to do, although I personally do not believe the chapter describes the full benefits.

Wednesday, 26 March 2008

Why use AJAX in the enterprise?

Creating an enterprise application for the browser requires the following at a minimum:

- Responsive user interface

- Ability to work with large volumes of data

- Provide all the features expected from a desktop application, which probably means interacting with other desktop applications.

The Computerworld article quotes:

"most AJAX frameworks tend to keep all real business logic on the server as opposed to local systems, user interactions may require a roundtrip communication between the browser and server for each input field"

"Some large applications could easily have 50 fields on a single screen."

This statement seems to have caused quite a stir, resulting in a number of strongly worded comments. I would agree that 50 fields does seem like an extreme GUI and probably breaks a lot of usability and GUI design rules, but I have seen a number of Call Centre applications, both in the UK and the Far East, which have a lot of complex screens with many input fields, including editable lists on one screen (maybe not 50). Sometimes the nature of the business domain requires this. So technical purists who maybe have little business knowledge probably need to tone down their comments a little. It is the business that drives IT demand, and it is down to people working in technology to meet the business demands, not to enforce technical constraints on business users.

"As a result, AJAX developers told Forrester that they had to reduce real-time input validation compared with traditional rich clients to meet performance requirements. Real time input validation is a top priority for power users, the report said."

Well, why not consider Applet-based technologies? Applets provide a rich user experience all within a browser, the best of both worlds. I was surprised that the report summary only discussed Adobe and Microsoft technologies. This shows a lack of knowledge of what Sun are doing in Java 6 with the new Java plugin and Java FX.

Overall I feel a lot of analysts and the developer community are ignoring Applets as a technology because of their poor history. I feel that Applets should be used in Rich Enterprise Web Applications, and Sun need to advertise the power of Applets with the new support in Java 6. There are enough Java developers, and using a tool like Altio abstracts away the complexity of understanding Swing and AWT.

Tuesday, 18 March 2008

How to set up an HP C4380 with an Orange Livebox

The one thing you need to make sure you do is press the number "1" button above the USB port. This enables the discovery mode of the Livebox, which adds the printer to the MAC address list. By default the Livebox stores the MAC addresses of registered equipment, so printers, laptops and so on cannot connect without an entry in the MAC address list.

So if you set up the printer using the HP software, it will say that the installation was successful but may not allow you to print. I resolved this by pressing the "1" button on the Livebox, then turning the C4380 off and back on. I could then print wirelessly, as the printer could now get an IP address.

A new printer for home

My requirements were

- Cheap to run

- Capable of printing photos

- Works wirelessly (Bluetooth or WiFi)

- Works with Apple Mac and Windows (XP and Vista)

My first mistake was to go out and buy something without researching (I was in a bit of a hurry, which is my excuse). Normally I would research quite a bit before buying computer hardware.

I'd previously read several articles in photography magazines about all-in-one photo printers, and felt that was a good option as it would save desk space (scanner, printer and copier all rolled into one). So I bought a Kodak 5300. My reasoning was that it was advertised as being 50% cheaper to run than all its competitors. Great, the kids can print and print without me breaking out in a cold sweat. I would need a Bluetooth dongle to go wire free, but I had one lying around somewhere so that shouldn't be a problem.

So I get home and begin the installation on my Apple Mac and the problems begin:

- Says compatible with OSX 10.4.8 and above - well I'm on 10.4.11 and the installation software flatly refused to accept that 10.4.11 is newer than 10.4.8. So off I go to the Kodak web site and download a newer installer, problem resolved. The software installs fine, and I can print using the USB port.

- Next problem. As with all new things, I like to test them. So I try the scanner and it actually locks up my Apple Mac (and I mean really locks it up, I had to turn the Mac off). Back to the Kodak site to download and install new firmware. At this stage I'm wondering if I have made a mistake here.

- Third problem. I plug the Bluetooth adapter in, thinking surely no more problems. Oh how wrong I am. Nothing can see the printer; I even tried my mobile phone. So I go back to Google to see what I can find out, and guess what: the only Bluetooth dongle supported is the Kodak one, which you can't buy from the shops and have to order (I wish the box had been more specific).

So I get a recommendation for an HP C4380 and I start the process all over again.

Install the software on a Mac using the USB port, prints and scans fine.

Disconnect the USB cable, connect the printer to the wireless router, and try to print from the Apple Mac. That worked, and I can also scan images remotely (a bit daft because I have to walk from one room to another to do it, but it's still a neat trick to impress the kids). I'm well impressed so far; well done HP for a superb product. Not so well done Kodak, I think you need to sort out your design specs. I can live with slightly more expensive ink cartridges if everything else works.

By the way, my next blog will be about getting an HP C4380 working with an Orange Livebox.